Positioning technology for autonomous driving answers the question "Where am I?", and it must meet very high requirements for reliability and safety. In addition to GPS and inertial sensors, systems usually match LiDAR point clouds against high-precision maps and use visual odometry and other methods, so that the different positioning methods can correct one another and produce a more accurate result. As autonomous driving develops, positioning technology will continue to be optimized.

Generally speaking, autonomous driving comes down to three questions: Where am I? Where am I going? How do I get there? Only a complete solution to all three amounts to true autonomous driving.

Positioning technology addresses the first question, "Where am I?", and autonomous driving needs centimeter-level positioning.

At present, autonomous driving technology largely comes from robotics. A self-driving car can be regarded as a wheeled robot plus a comfortable sofa. In a robot system, positioning and path planning are two parts of one problem: without positioning, no path can be planned. Centimeter-level real-time positioning is one of the biggest challenges in autonomous driving. Robot systems position themselves mainly by cross-checking SLAM output against a prior map.

SLAM stands for Simultaneous Localization and Mapping. It refers to building a map of the environment while estimating the position of the moving platform from sensor data.

Because sensor types and mounting arrangements differ, the implementation and difficulty of SLAM vary greatly. By sensor, SLAM falls into two main categories: laser (LiDAR) SLAM and visual SLAM.

Through positioning, an autonomous vehicle accurately perceives its position relative to the global environment, treating itself as a single point (a particle) placed within that environment.

Positioning techniques can be divided into three categories by principle. The first is signal-based positioning, typified by GNSS (the global navigation satellite system). The second is dead reckoning, which relies on an IMU and similar sensors to infer the current position and orientation from the previous position and orientation. The third is environmental feature matching, typified by LiDAR-based positioning: observed features are matched against the features stored in a database to obtain the current vehicle position and attitude.

Existing high-precision positioning for unmanned vehicles can still be inaccurate in some situations, so a positioning scheme that relies on GPS alone is not reliable enough.

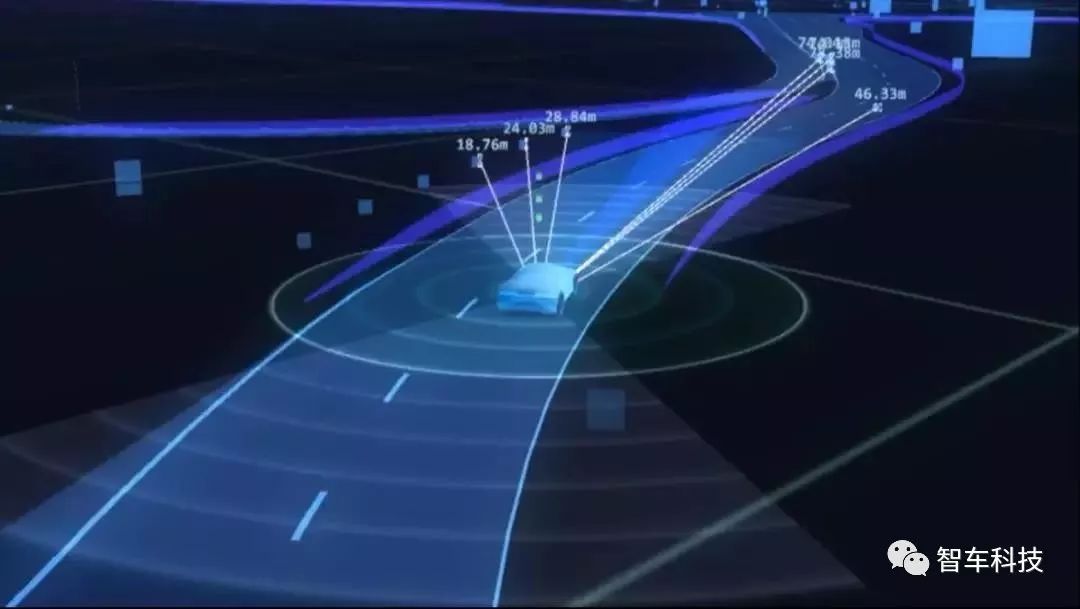

Therefore, autonomous driving generally uses combined positioning. First, proprioceptive sensors such as odometers and gyroscopes measure the distance travelled and the change of direction relative to a given initial position and attitude (together called the pose) in order to determine the robot's current pose. This is known as dead reckoning. Then LiDAR or vision is used to perceive the environment, and active or passive markers, map matching, GPS, or navigation beacons are used for positioning. Position calculation methods include triangulation, trilateration, and model-matching algorithms. From this perspective, the IMU is also a necessary component for autonomous driving.
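The dead-reckoning step can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function name `dead_reckon`, the planar (x, y, heading) pose, and the sample odometer and gyroscope increments are all invented for the example and are not taken from any particular vehicle stack.

```python
import math

def dead_reckon(pose, distance, delta_heading):
    """Advance a planar pose (x, y, heading in radians) by one step.

    distance:      metres travelled since the last step (odometer)
    delta_heading: heading change since the last step (gyroscope)
    """
    x, y, heading = pose
    # Assumption: the turn is spread evenly over the step, so the
    # vehicle moves along the mid-step heading.
    mid_heading = heading + delta_heading / 2.0
    return (
        x + distance * math.cos(mid_heading),
        y + distance * math.sin(mid_heading),
        heading + delta_heading,
    )

# Hypothetical increments: 1 m straight, 1 m while turning 90 degrees
# to the left, then 1 m straight again.
pose = (0.0, 0.0, 0.0)
for distance, delta_heading in [(1.0, 0.0), (1.0, math.pi / 2), (1.0, 0.0)]:
    pose = dead_reckon(pose, distance, delta_heading)
print(pose)
```

Each step adds that step's sensor error to the pose, which is why dead reckoning drifts unless an external reference such as GPS or map matching corrects it.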

The inertial measurement unit (IMU) is a high-frequency (1 kHz) sensor that measures acceleration and rotation. Processing its data yields the displacement and rotation of the vehicle in real time. However, the inertial sensor has its own bias and noise. By using Kalman-filter-based sensor fusion, we can fuse GPS and inertial sensor data and exploit the strengths of each to achieve better positioning.
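To make the GPS and IMU fusion concrete, here is a minimal one-dimensional Kalman filter sketch. It assumes a state of position and velocity along a straight line, uses the IMU acceleration in the prediction step, and uses the GPS position in the update step. The class name `GpsImuFilter`, the noise variances, and the sample readings are illustrative assumptions, not values from a real system.

```python
class GpsImuFilter:
    """1D Kalman filter: IMU acceleration predicts, GPS position corrects."""

    def __init__(self, pos=0.0, vel=0.0, pos_var=25.0, vel_var=1.0):
        self.p, self.v = pos, vel
        # 2x2 covariance of (position, velocity).
        self.P = [[pos_var, 0.0], [0.0, vel_var]]

    def predict(self, accel, dt, accel_var=0.04):
        # Constant-acceleration motion model over one IMU interval.
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (a, b), (c, d) = self.P
        g0, g1 = 0.5 * dt * dt, dt  # how acceleration noise enters the state
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = accel_var * g g^T.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + accel_var * g0 * g0,
             b + dt * d + accel_var * g0 * g1],
            [c + dt * d + accel_var * g0 * g1,
             d + accel_var * g1 * g1],
        ]

    def update(self, gps_pos, gps_var=4.0):
        (a, b), (c, d) = self.P
        s = a + gps_var              # innovation variance
        k0, k1 = a / s, c / s        # Kalman gain for position and velocity
        innovation = gps_pos - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        # P = (I - K H) P with H = [1, 0].
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]

# Hypothetical run: 1 m/s^2 acceleration, IMU at 10 Hz, GPS at 1 Hz.
# The GPS errors below are made-up numbers, not recorded data.
gps_errors = [1.5, -2.0, 0.8, -1.2, 0.5]
kf = GpsImuFilter()
for second, error in enumerate(gps_errors, start=1):
    for _ in range(10):
        kf.predict(accel=1.0, dt=0.1)
    kf.update(gps_pos=0.5 * second ** 2 + error)
print(round(kf.p, 2), round(kf.P[0][0], 2))
```

Between GPS fixes the position variance grows with every IMU step, and each fix shrinks it again. That is the sense in which the two sensors complement each other.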

Note that because unmanned driving demands very high reliability and safety, positioning based on GPS and inertial sensors is not the only positioning method it uses.

As far as current technology is concerned, there are three types of positioning methods for autonomous driving. The three are usually used together and correct one another to achieve a more accurate result:

Sensor fusion based on GPS and inertial sensors;

Matching of LiDAR point clouds against a high-precision map;

Vision-based road feature recognition.

All three types combine multiple sensors to solve the positioning problem. Several specific positioning methods follow:

1. The most common approach in the industry fuses GPS, a high-precision map, and camera (or LiDAR, etc.) information.

LiDAR SLAM uses the vehicle's GPS and IMU to make a rough position estimate. It then compares the LiDAR SLAM point cloud with the high-precision map prepared in advance (the prior map); that is, it registers the two by placing them in the same coordinate system. Once the match succeeds, the position of the car is confirmed. This is currently the most mature and accurate method.

First, the GPS data (longitude, latitude, and heading) are used to determine which road the unmanned vehicle is on. This position may be 5 to 10 meters away from the true position.

Next, the distances to the lane lines (dashed and solid) and the road edges (curbs or guardrails) detected by the onboard sensors are compared with the lane lines and road edges in the high-precision map, and the lateral position of the unmanned vehicle is corrected accordingly.

Then the billboards, traffic lights, wall signs, and road markings (stop lines, arrows, etc.) detected by the vehicle's sensors are matched against the same road features (POI) in the high-precision map, and the longitudinal position and heading are corrected. When no road features are detected, dead reckoning can provide a short-term position estimate.
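The lateral and longitudinal corrections above amount to simple arithmetic once the map and the detections share a road-aligned frame. The sketch below assumes such a frame (lateral coordinate positive to the left, longitudinal coordinate along the road); the function names and the sample numbers are hypothetical.

```python
def lateral_from_lane_lines(map_left_y, map_right_y, dist_to_left, dist_to_right):
    """Lateral position in the map's road frame (metres, positive to the left).

    map_left_y, map_right_y:     lane-line positions taken from the map
    dist_to_left, dist_to_right: distances to those lines measured on board
    """
    from_left = map_left_y - dist_to_left      # vehicle sits right of the left line
    from_right = map_right_y + dist_to_right   # vehicle sits left of the right line
    # Averaging the two estimates is an assumption; a real system would
    # weight them by detection confidence.
    return (from_left + from_right) / 2.0

def longitudinal_from_landmark(map_landmark_s, dist_ahead):
    """Position along the road, given a mapped landmark seen dist_ahead metres ahead."""
    return map_landmark_s - dist_ahead

# Hypothetical lane 3.5 m wide, centred on y = 0 in the map.
lateral = lateral_from_lane_lines(1.75, -1.75, 1.25, 2.25)
# Hypothetical stop line mapped at s = 120 m and detected 18.5 m ahead.
longitudinal = longitudinal_from_landmark(120.0, 18.5)
print(lateral, longitudinal)
```

In this made-up case the vehicle is 0.5 m left of the lane centre and 101.5 m along the road, values that would replace the much coarser GPS estimate.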

The positioning algorithm of unmanned vehicles usually uses particle filtering. It takes several calculation cycles before the result converges, after which it provides a relatively stable position. I will introduce the principle of the particle filter in a later article in this series.
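Ahead of that fuller treatment, the following one-dimensional sketch shows why a particle filter needs a few cycles to converge. It is a simplified illustration, not the algorithm of any specific vehicle: particles start spread over a 20 m span (roughly the GPS uncertainty mentioned above), move with the vehicle, are weighted by how well they agree with a position measurement, and are then resampled. The function name, noise levels, and measurements are assumptions.

```python
import math
import random

def particle_filter_1d(measurements, step, n=500, span=(0.0, 20.0),
                       motion_std=0.2, meas_std=0.5, seed=0):
    """Return one position estimate per measurement along a 1D road."""
    rng = random.Random(seed)
    # Initial belief: anywhere within the coarse GPS span.
    particles = [rng.uniform(*span) for _ in range(n)]
    estimates = []
    for z in measurements:
        # Predict: move every particle by the odometry step plus noise.
        particles = [p + step + rng.gauss(0.0, motion_std) for p in particles]
        # Weight: Gaussian likelihood of the measurement for each particle.
        weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in particles]
        total = sum(weights)
        if total == 0.0:
            # No particle explains the measurement; fall back to equal weights.
            weights = [1.0] * n
            total = float(n)
        estimates.append(sum(w * p for w, p in zip(weights, particles)) / total)
        # Resample: keep particles in proportion to their weights.
        particles = rng.choices(particles, weights=weights, k=n)
    return estimates

# Hypothetical drive: the vehicle starts at 7 m and advances 1 m per cycle;
# the measurements here are noise-free for clarity.
measurements = [7.0 + k for k in range(1, 16)]
estimates = particle_filter_1d(measurements, step=1.0)
print(round(estimates[-1], 2))
```

After resampling, the surviving particles cluster around positions consistent with the measurements, so the spread of the cloud shrinks over successive cycles.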

2. Image-enhanced positioning. LiDAR is usually combined with a vision system for positioning. This method requires a 3D map built with LiDAR in advance. A 2D ground-only model map is obtained from it using Ground-Plane Sufficient, OpenGL is used to bring the monocular camera image and this 2D ground map into a common frame through coordinate transformation, and the two are registered using normalized mutual information (NMI). An extended Kalman filter (EKF) is then used to produce the position.
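Normalized mutual information scores how well two images line up without requiring their brightness values to match, which suits comparing a camera image with a LiDAR-derived map. The sketch below uses one common definition, NMI = (H(A) + H(B)) / H(A, B), on small flattened images. The function names and the toy pixel values are assumptions, and the source does not say which NMI variant the original method uses.

```python
import math
from collections import Counter

def _entropy(counts, total):
    """Shannon entropy in bits of a histogram given as raw counts."""
    return -sum((c / total) * math.log2(c / total) for c in counts)

def normalized_mutual_information(image_a, image_b):
    """NMI = (H(A) + H(B)) / H(A, B) over paired pixel values.

    Both images are flat sequences of discrete (already binned) values.
    The score ranges from 1.0 (independent) to 2.0 (one image fully
    determines the other).
    """
    if len(image_a) != len(image_b) or len(image_a) == 0:
        raise ValueError("images must be non-empty and the same size")
    n = len(image_a)
    h_a = _entropy(Counter(image_a).values(), n)
    h_b = _entropy(Counter(image_b).values(), n)
    h_ab = _entropy(Counter(zip(image_a, image_b)).values(), n)
    if h_ab == 0.0:
        # Assumption: two flat images carry no alignment information,
        # so report the minimum score.
        return 1.0
    return (h_a + h_b) / h_ab

# Toy data: the camera sees the same structure as the map on a different
# brightness scale, once aligned and once misaligned.
map_patch = [0, 0, 1, 1, 2, 2, 3, 3]
camera_aligned = [10, 10, 20, 20, 30, 30, 40, 40]
camera_misaligned = [10, 20, 30, 40, 10, 20, 30, 40]
print(normalized_mutual_information(map_patch, camera_aligned))
print(normalized_mutual_information(map_patch, camera_misaligned))
```

A registration routine would evaluate this score for many candidate transformations and keep the one with the highest value.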

3. Positioning with LiDAR intensity scan images. LiDAR has two basic imaging modes. One is 3D range imaging, which can be roughly understood as the point cloud. The other is intensity scan imaging: the laser is reflected by objects, and the differences in reflection intensity yield an intensity image. The intensity values are contained in the point cloud, and separating them out is one of the core techniques. This positioning method requires building a special SLAM system in advance, called Pose-Graph SLAM, which can loosely be regarded as a high-definition map made by LiDAR. It has three constraints: a scan-matching constraint, an odometry constraint, and a GPS prior constraint. The intensity values and the true ground plane are extracted from the LiDAR 3D point cloud and converted into a 2D ground intensity scan image, which is then matched against the Pose-Graph SLAM result to obtain the position.
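The way the three constraints pull against one another can be illustrated with a deliberately reduced pose graph in which every pose is a single coordinate along a line. Real Pose-Graph SLAM optimizes full 2D or 3D poses with a nonlinear solver, so the following is only a sketch under that simplifying assumption. The function name, weights, and readings are hypothetical.

```python
def solve_pose_graph_1d(gps, odometry, scan_match,
                        w_gps=1.0, w_odo=50.0, w_scan=50.0):
    """Weighted least-squares positions along a line.

    gps:        one absolute position per pose (GPS prior constraint)
    odometry:   measured displacement between consecutive poses
    scan_match: displacement between consecutive poses from scan matching
    """
    n = len(gps)
    if n < 2 or len(odometry) != n - 1 or len(scan_match) != n - 1:
        raise ValueError("need n poses and n - 1 relative measurements of each kind")
    w_rel = w_odo + w_scan
    # Build the normal equations, which form a tridiagonal system.
    diag = [w_gps] * n
    rhs = [w_gps * z for z in gps]
    for i in range(n - 1):
        pull = w_odo * odometry[i] + w_scan * scan_match[i]
        diag[i] += w_rel
        diag[i + 1] += w_rel
        rhs[i] -= pull
        rhs[i + 1] += pull
    off = -w_rel  # every off-diagonal entry
    # Thomas algorithm: forward elimination, then back substitution.
    for i in range(1, n):
        m = off / diag[i - 1]
        diag[i] -= m * off
        rhs[i] -= m * rhs[i - 1]
    x = [0.0] * n
    x[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        x[i] = (rhs[i] - off * x[i + 1]) / diag[i]
    return x

# Hypothetical readings: the true poses are 0, 1, 2, 3 m; GPS is noisy,
# while odometry and scan matching both report 1 m per step.
poses = solve_pose_graph_1d(gps=[0.3, 0.8, 2.4, 2.9],
                            odometry=[1.0, 1.0, 1.0],
                            scan_match=[1.0, 1.0, 1.0])
print([round(p, 3) for p in poses])
```

With the relative constraints weighted heavily, the solution keeps the 1 m spacing reported by odometry and scan matching, while the GPS prior fixes where the whole chain sits globally.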

In addition, Gaussian mixture maps can be used for positioning. In harsh conditions, such as thick snow, muddy roads with leftover snow, and old damaged roads that lack texture, positioning with a Gaussian mixture model improves the robustness of LiDAR positioning.

4. REM, proposed by Mobileye. REM is presented as a positioning method that does not require SLAM, but it is clearly just a variant of visual SLAM. Mobileye obtains sparse 3D coordinate data by collecting "landmarks" such as traffic signals, direction signs, rectangular signs, street lights, and reflective markers. It then obtains rich 1D data by identifying lane lines, road edges, median strips, and so on. The sparse 3D data and rich 1D data together amount to only 10 KB/km, and camera images can be localized by matching them against this REM map. Mobileye's design is undoubtedly the lowest in cost, but it presupposes that at least tens of millions of vehicles are equipped with a REM system that automatically collects data and uploads it to the cloud. On road sections or off-road areas that no REM-equipped vehicle has driven, positioning is not possible.

However, this method raises the following doubts:

It is impossible for REM-equipped vehicles to cover every inch of land worldwide. Data collection may also raise privacy and data ownership issues. Who owns the data: the car owner, the car company, the cloud service provider, or Mobileye? This question is difficult to settle.

At the same time, REM data must be updated promptly, almost in real time, and lighting has a significant impact on the data. REM must filter out unsuitable data, so keeping the map valid requires a very large amount of data and computation. Who will maintain this huge computing system?

The most fatal point is that REM is based on vision. It can only be used when the weather is fine and the lighting changes little, which greatly limits its practical range, whereas LiDAR can handle 95% of road conditions.

The above are only the common, general positioning methods. There are of course many specific ones, and sensors can be combined freely to suit the method. A fusion scheme positions more accurately than a single sensor, and if one sensor fails in a given environment, another can take over. Some common multi-sensor fusion schemes on the market are:

1. GPS + IMU + odometer for autonomous driving

The global anchoring provided by GPS eliminates cumulative error, but its update rate is low and its signal is easily blocked. The IMU and the wheel odometer update at a high rate, but they accumulate error. The most obvious approach is to take the GPS position whenever a fix arrives, with an error equal to the GPS accuracy, and to accumulate the pose with the IMU (angle accumulation) and the odometer (displacement accumulation) in the interval until the next fix. The error of the intermediate poses is then the initial GPS positioning error plus the error accumulated in between.

The improved method uses nonlinear Kalman filtering. When a GPS position arrives, the accumulated prediction from the IMU and odometer is combined with the GPS observation to compute a better position estimate whose error converges.
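One common form of nonlinear Kalman filtering is the extended Kalman filter (EKF), and the sketch below uses it as an assumption, since the text does not say which variant is meant. The state is a planar pose (x, y, heading). The odometer distance and gyroscope heading change drive the prediction, and a GPS fix corrects it. The class name, noise values, and the sample fix are all hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

class PoseEkf:
    """EKF over (x, y, heading): odometer + gyro predict, GPS corrects."""

    def __init__(self, x, y, theta, pos_var=25.0, theta_var=0.1):
        self.state = [x, y, theta]
        self.P = [[pos_var, 0.0, 0.0], [0.0, pos_var, 0.0], [0.0, 0.0, theta_var]]

    def predict(self, dist, dtheta, dist_var=0.01, dtheta_var=0.0001):
        x, y, th = self.state
        self.state = [x + dist * math.cos(th), y + dist * math.sin(th), th + dtheta]
        # Jacobian of the motion model with respect to the state.
        F = [[1.0, 0.0, -dist * math.sin(th)],
             [0.0, 1.0, dist * math.cos(th)],
             [0.0, 0.0, 1.0]]
        P = matmul(matmul(F, self.P), transpose(F))
        # Simplified diagonal process noise (an assumption of this sketch).
        P[0][0] += dist_var
        P[1][1] += dist_var
        P[2][2] += dtheta_var
        self.P = P

    def update_gps(self, gx, gy, gps_var=4.0):
        P = self.P
        # Innovation covariance S = H P H^T + R, with H selecting x and y.
        s00, s01 = P[0][0] + gps_var, P[0][1]
        s10, s11 = P[1][0], P[1][1] + gps_var
        det = s00 * s11 - s01 * s10
        s_inv = [[s11 / det, -s01 / det], [-s10 / det, s00 / det]]
        K = matmul([[row[0], row[1]] for row in P], s_inv)  # 3x2 gain
        rx, ry = gx - self.state[0], gy - self.state[1]
        self.state = [self.state[i] + K[i][0] * rx + K[i][1] * ry for i in range(3)]
        # P = (I - K H) P
        i_kh = [[(1.0 if i == j else 0.0) - (K[i][j] if j < 2 else 0.0)
                 for j in range(3)] for i in range(3)]
        self.P = matmul(i_kh, P)

# Hypothetical run: five 1 m odometry steps straight ahead, then one GPS fix.
ekf = PoseEkf(0.0, 0.0, 0.0)
for _ in range(5):
    ekf.predict(dist=1.0, dtheta=0.0)
ekf.update_gps(5.3, 0.4)
print([round(v, 3) for v in ekf.state])
```

Because the covariance links heading to lateral position, a GPS fix that lies to one side of the predicted path also nudges the heading estimate, something the simple reset-to-GPS approach cannot do.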

2. GPS + multi-line LiDAR + high-precision map matching for autonomous driving

GPS provides a global anchor, and the LiDAR SLAM front-end odometry accumulates the pose in between. Combined with matching against the high-precision map, a back-end optimization similar to loop closure fuses GPS, LiDAR, and the known map.

3. SLAM with multiple stereo camera pairs for autonomous driving

This kind of solution is low in cost, but its algorithms are more demanding. Few autonomous driving companies claim to focus on pure vision, and it is not mainstream at present.

4. Single-line LiDAR + IMU + odometer fusion

This combination meets the requirements of indoor positioning. In my understanding, it can be divided into shallow fusion and deep fusion. Shallow fusion uses the accumulated IMU + odometer value as the initial value for the LiDAR odometry; starting consecutive-frame scan matching from this initial value greatly speeds up matching. Deep fusion uses the IMU and odometer values as constraints and applies them in the back-end loop-closure optimization.

5. Depth camera + IMU fusion

At present, there are early signs of VR applications on mobile phones, such as Apple's iPhone X and Google's Tango project, which was released some time ago. Depth-camera visual SLAM is deeply fused with the IMU to achieve a relatively good VR experience.

Unmanned driving places very high demands on reliability and safety. In addition to GPS and inertial sensors, we usually match LiDAR point clouds against high-precision maps and use visual odometry and other methods, so that the various positioning methods correct one another and produce more accurate results. As autonomous driving technology develops, positioning technology will continue to be optimized.
